AI Scribes are not yet productivity tools

Two years into AI scribes: What the latest clinical trials reveal and why vertical healthcare infrastructure matters more than ever.

NEJM AI has just published the first two randomized controlled trials evaluating AI scribing technologies in real clinical settings. The studies examined three commercially available, application-layer scribes (Abridge, DAX Copilot, and Nabla) across two health systems in English-language outpatient settings, providing the first controlled evidence on how today’s scribing tools affect clinician workload, documentation time, and burnout.

The results are an important milestone for the field. They also expose the limits of current approaches and sharpen a growing distinction: ambient scribes aspire to be platforms that reduce burnout and serve as the productivity levers clinicians and health systems need, but today these point-solution tools are not yet productivity platforms. That is either because clinicians spend hours correcting hallucinated outputs from scribes built on generalist infrastructure, or because the foundation these products are built on isn't yet capable enough to expand into more specialties (driving broader adoption) or to power the downstream workflows that would augment processes with AI and deliver greater ROI.

Performance gaps start at the infrastructure layer

As demand for ambient scribing accelerates, the architecture beneath each product now determines whether AI ambient scribes deliver on their ROI promise.

What we now know

The studies assessed the amount of time clinicians saved, the reduction in burnout, and the consistency of note production. Three key findings emerged:

- Time savings were real, but modest.

Across the three products tested, clinicians saved 7–22 minutes per day in post-encounter work, depending on specialty and workflow. The authors noted that the upper range may be overstated due to reliance on EHR activity logs.

- Burnout reduction was consistent.

All three products reduced documentation distress and after-hours work, one of the clearest signals in the data.

- No evidence yet that scribes “pay for themselves.”

Whether through increased patient throughput or margin expansion across the care delivery journey, the case for real ROI is still hard to make. However, the clinical and operational benefits (reduced task load, more predictable documentation, and fewer after-hours chores) are meaningful.

What the trials did not assess is equally important

The studies measured outputs (time saved, burnout reduced), not the reasons behind the modest gains. They didn't compare underlying speech-to-text quality, medical reasoning, grounding mechanisms, or model-level accuracy across vendors. These architectural components are where performance diverges most sharply in production.

At Corti, through our work with thousands of developers building voice-assisted AI applications for healthcare, we know what happens when these foundations are weak. Builders come to us with applications built on generalist infrastructure, stitched together with brittle prompt stacks, creating engineering headaches and end-user frustration when outputs stray from the truth of the clinical visit. When applications with those foundations go into production, clinicians lose trust, developers spend cycles patching hallucinations, and health systems ultimately see adoption stall because the tools can't scale beyond narrow use cases or reliably support the downstream workflows that drive real ROI gains.

We believe the ceiling on the transition from point solutions to productivity platforms is set at the infrastructure layer.

Why architecture explains the performance ceiling

The three tools evaluated share a common approach: they assemble an application from general-purpose models for speech-to-text and text generation. This pattern imposes structural limits:

- General ASR struggles with specialty vocabulary, overlapping speakers, and healthcare-specific commands required for precise dictation workflows.

- General LLMs rely on pattern completion rather than clinical reasoning.

- Hallucination mitigation is bolted on through heuristics, not native constraint systems.

- Template-based summarization leads to inconsistent completeness and drift.
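The vocabulary problem in the first bullet can be made measurable. Below is a minimal sketch of a medical term recall metric: the share of expected clinical terms that appear verbatim in a transcript. It assumes a hand-curated list of reference terms per encounter and exact token matching; the function names and sample sentences are hypothetical, and this is not necessarily how the trial vendors or Corti compute recall.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters, digits, and hyphens as tokens.
    return re.findall(r"[a-z0-9\-]+", text.lower())


def contains_term(tokens: list[str], term_tokens: list[str]) -> bool:
    # True if term_tokens appears as a contiguous run inside tokens.
    n = len(term_tokens)
    return any(tokens[i:i + n] == term_tokens for i in range(len(tokens) - n + 1))


def term_recall(reference_terms: list[str], hypothesis: str) -> float:
    """Share of reference medical terms found verbatim in an ASR transcript."""
    if not reference_terms:
        return 1.0  # nothing to recall
    tokens = tokenize(hypothesis)
    hits = sum(contains_term(tokens, tokenize(t)) for t in reference_terms)
    return hits / len(reference_terms)


# Hypothetical example: one encounter, three terms a clinician would expect.
terms = ["metoprolol", "atrial fibrillation", "echocardiogram"]
generic_asr = "Patient has a trial fibrillation, continue meta prolol, order echocardiogram."
clinical_asr = "Patient has atrial fibrillation, continue metoprolol, order echocardiogram."

print(f"generic:  {term_recall(terms, generic_asr):.2f}")
print(f"clinical: {term_recall(terms, clinical_asr):.2f}")
```

In the generic transcript, "atrial fibrillation" and "metoprolol" are split into plausible-sounding everyday words, so only one of three terms is recalled even though most of the sentence looks right to a casual reader.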

These weaknesses were evident in the trials through limited time savings and variable improvements in task load.

They’re also evident in broader industry data. According to our First AId Report earlier this year, nearly 30% of U.S. clinicians using general-purpose AI systems spend 1–3 hours per week correcting AI-generated errors, a direct cost on clinician time and attention that erodes the intended efficiency gains.
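To compare the two figures, it helps to put them on the same weekly scale. The sketch below assumes a 5-day clinical week, which is our assumption rather than a trial parameter. It only converts units and does not subtract one figure from the other: the numbers describe different clinician populations, and the trials' EHR-log measurements may already include some editing time.

```python
DAYS_PER_WEEK = 5  # assumption: a 5-day clinical week (not a trial parameter)


def per_week_from_daily_minutes(low: float, high: float) -> tuple[float, float]:
    # Convert a minutes-per-day range into minutes per week.
    return low * DAYS_PER_WEEK, high * DAYS_PER_WEEK


def per_week_from_weekly_hours(low: float, high: float) -> tuple[float, float]:
    # Convert an hours-per-week range into minutes per week.
    return low * 60, high * 60


saved = per_week_from_daily_minutes(7, 22)     # NEJM AI trials: 7-22 min/day
correcting = per_week_from_weekly_hours(1, 3)  # First AId Report: 1-3 h/week

print(f"Time saved by scribes:     {saved[0]:.0f}-{saved[1]:.0f} min/week")
print(f"Time correcting AI errors: {correcting[0]:.0f}-{correcting[1]:.0f} min/week")
```

On a common scale the two ranges (35–110 versus 60–180 minutes per week) are of the same order of magnitude, which illustrates how correction time can absorb much of a modest gain.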

The message is consistent: clinical documentation cannot rely on general AI.

Healthcare language is structured, hierarchical, contextual, and safety-critical. Infrastructure is where performance and reliability are determined.

An architectural solution: Vertical infrastructure as the foundation

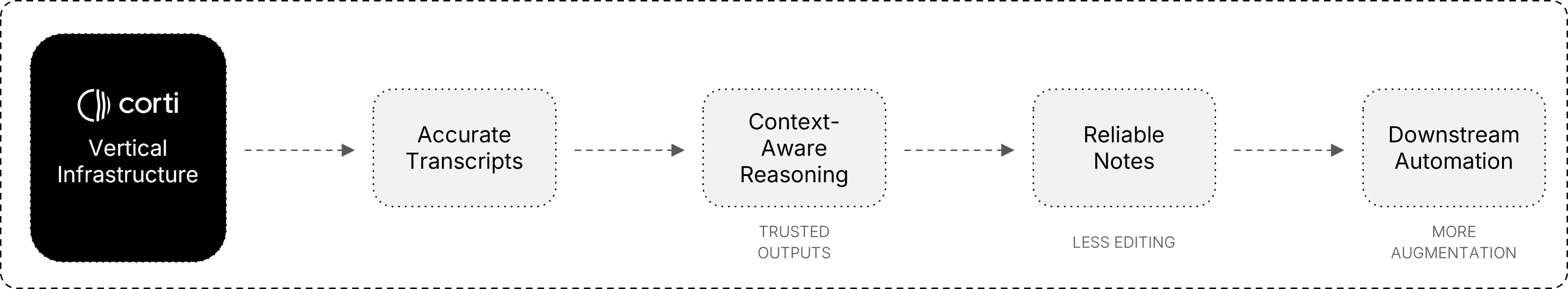

Corti adopts a fundamentally different approach. We enable developers to build clinical-grade applications using healthcare-native AI infrastructure embedded directly into their own products. The structural limitations of stitching together generalist infrastructure? We’ve solved for that at Corti.

- Vocabulary: Our proprietary speech-to-text models are trained exclusively on clinical audio and validated against one of the industry's largest medical lexicons. We achieve higher medical term recall because our models learn from real encounters across specialties, not consumer conversations or general internet data. Specialty vocabulary, drug names, anatomical terms, and procedure codes are captured accurately from the start, not corrected after the fact.

- Clinical reasoning: Our FactsR recursive reasoning engine, accessible for both async and synchronous workflows, uses multi-step processing to optimize text generation quality. Instead of a single LLM with one input-output cycle, FactsR isolates clinical findings through structured extraction before generating documentation.

- Hallucination mitigation: We implement validation layers built into the infrastructure itself. Corti combines its FactsR recursive reasoning model with a Coarse-to-Fine output method to minimize hallucination risk. This multi-step approach ensures output is accurate and aligned to each request.
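The general pattern in the last two bullets (extract findings first, generate only from those findings, then validate the output against them) can be sketched in a few lines. This is a toy illustration under stated assumptions: a keyword lexicon stands in for a trained extraction model, and every name here (`Fact`, `extract_facts`, `generate_note`, `unsupported_lines`) is hypothetical. It does not reproduce FactsR, Corti's Coarse-to-Fine method, or any Corti API.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    category: str  # e.g. "symptom" or "medication"
    text: str      # the normalized finding
    source: str    # transcript sentence the fact was taken from


# Hypothetical stand-in for a trained extraction model: a tiny keyword lexicon.
# It does not handle negation ("no chest pain"), which a real system must.
LEXICON = {
    "medication": ["metoprolol", "lisinopril"],
    "symptom": ["chest pain", "palpitations", "shortness of breath"],
}
KNOWN_TERMS = {t for terms in LEXICON.values() for t in terms}


def extract_facts(transcript: str) -> list[Fact]:
    """Step 1: isolate structured findings, keeping a pointer to their source."""
    facts = []
    for sentence in re.split(r"(?<=[.?!])\s+", transcript.strip()):
        lowered = sentence.lower()
        for category, terms in LEXICON.items():
            for term in terms:
                if term in lowered:
                    facts.append(Fact(category, term, sentence))
    return facts


def generate_note(facts: list[Fact]) -> list[str]:
    """Step 2: write note lines from the extracted facts only, not the raw transcript."""
    lines = []
    for category in ("symptom", "medication"):
        items = sorted({f.text for f in facts if f.category == category})
        if items:
            lines.append(f"{category.title()}s: {', '.join(items)}")
    return lines


def unsupported_lines(note_lines: list[str], facts: list[Fact]) -> list[str]:
    """Step 3: flag any line naming a clinical term with no extracted fact behind it."""
    supported = {f.text for f in facts}
    flagged = []
    for line in note_lines:
        mentioned = {t for t in KNOWN_TERMS if t in line.lower()}
        if mentioned - supported:
            flagged.append(line)
    return flagged


transcript = "Patient reports palpitations since Monday. She takes metoprolol daily."
facts = extract_facts(transcript)
note = generate_note(facts)
print(note)
print(unsupported_lines(note, facts))
print(unsupported_lines(note + ["Medications: lisinopril"], facts))
```

The last call simulates a hallucinated medication line: because nothing in the extracted facts supports "lisinopril", the validation step flags it instead of letting it reach the clinician.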

When the foundation is built for healthcare, performance improvements compound. Accurate transcripts enable context-aware reasoning. Reliable reasoning produces clinically complete and reliable notes. Complete notes require less editing. Less editing means clinicians trust the output. Trusted outputs enable downstream automation such as coding, referrals, and care coordination.
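One simplified way to see why improvements compound: if each stage in the chain is correct with some probability and errors are independent, end-to-end accuracy is the product of the stage accuracies. The per-stage numbers below are hypothetical, not measured values for any product, and real pipeline errors are often correlated rather than independent.

```python
from math import prod


def end_to_end_accuracy(stage_accuracies: list[float]) -> float:
    """Chance an output is correct through every stage, assuming independent errors."""
    return prod(stage_accuracies)


# Hypothetical per-stage accuracies for: transcript, reasoning, note, downstream coding.
weaker_foundation = [0.95, 0.95, 0.95, 0.95]
stronger_foundation = [0.99, 0.99, 0.99, 0.99]

print(f"weaker:   {end_to_end_accuracy(weaker_foundation):.3f}")
print(f"stronger: {end_to_end_accuracy(stronger_foundation):.3f}")
```

Under these made-up numbers, a 4-point gain at each of four stages lifts end-to-end accuracy from roughly 81% to roughly 96%, which is the sense in which a better foundation pays off more the further downstream you automate.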

What this means for application builders and the healthcare ecosystem

Selling an ambient scribe gets you in the door. What will drive real ROI for your customers is a highly accurate scribe capable of being adopted beyond the simple outpatient visit, reaching high penetration within provider populations that have complex workflows, patients, and specialties.

The NEJM AI results confirm that ambient scribing is no longer experimental. It reduces burnout and simplifies documentation across settings. But the results also highlight that the current generation of application-layer scribes built on generalist infrastructure is not the final form.

Ambient scribes will evolve from point solutions to platforms, powering downstream administrative automation like medical coding and CDI, and clinical automation like order recommendations and prior authorization. The infrastructure you build on determines whether you can make that transition or remain constrained to narrow use cases.

The scribe is your first feature, not your last. When you're ready to add medical coding, prior auth automation, or clinical decision support, you're building on the same foundation. Same speech-to-text pipeline. Same reasoning engine. Same agent framework. No stitching together different vendors.

You have to pick a starting point. Building on Corti means you don't get stuck there.

Don't get stuck as a point solution. Build on infrastructure that enables you to evolve into a platform.

Start building on our vertical infrastructure today: docs.corti.ai

Join our mission

We believe everyone should have access to medical expertise, no matter where they are.
