Introducing FactsR™: The thinking engine behind better clinical AI

How we taught our AI to think like a clinician

The limits of summarization-only AI

Most clinical scribes today rely on summarization, not understanding. These AI scribes follow a familiar pattern: capture the full consultation, pass it to a large language model, and generate a note after the visit. It sounds efficient, but this one-shot summarization approach is fragile.

Notes are often verbose, incomplete, or hallucinated. Critical nuance gets lost. Clinicians are left reviewing a black-box output, unable to see or correct how the note was formed. There’s no interaction, no validation, and no opportunity to course-correct during the visit.

In real-world rollouts, almost one-third of users report spending up to three extra hours per week correcting AI-generated notes. What was meant to lighten the load instead piles on new chores, ushering in an unwelcome era of "note bloat."

“Did the AI get this right? What did it miss? Can I trust it?”

These are the questions clinicians are left asking.

So our Machine Learning team set out to design a system that doesn’t just summarize after the fact, but reasons through the visit in real time. One that mirrors how clinicians reason: gathering facts as they emerge, evaluating their relevance, and constructing a note that reflects both what was said and what matters. We believe the real potential of AI in healthcare can be unlocked by better AI systems, and FactsR is designed to enable just that.

How clinical note generation has evolved

Reasoning is how clinicians work. So why doesn’t AI?

Clinicians don’t summarize; they reason. They gather facts, assess what matters, and synthesize meaning in real time. This is structured clinical reasoning, and it’s foundational to safe, effective care. So when we set out to reimagine clinical documentation, we asked:

What if AI could reason like a clinician — not just write like one?

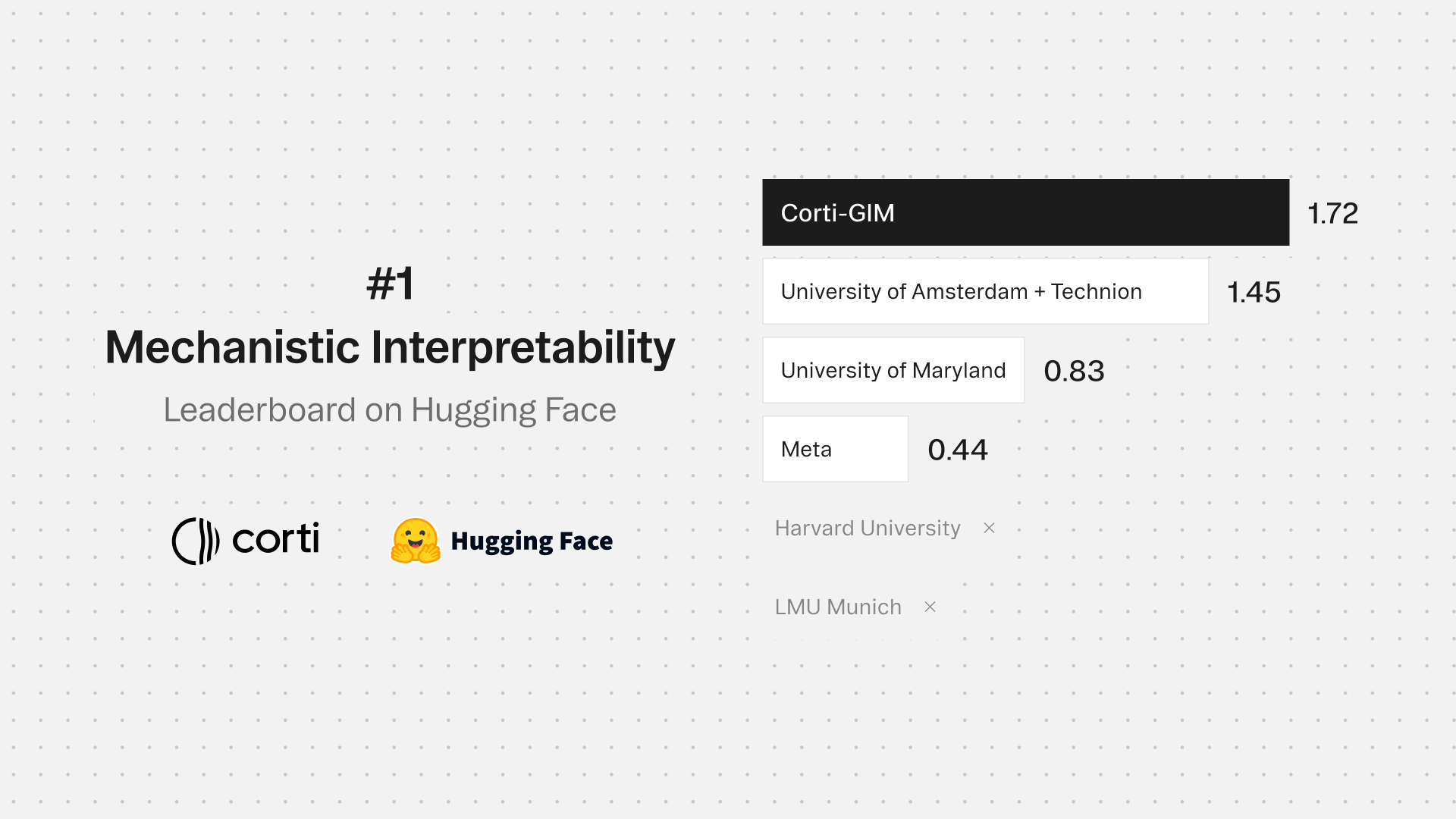

At Corti, we had the right foundation to answer that. We've built our own proprietary foundation models, trained specifically on the complexity of clinical dialogue. Because we own our full stack, we weren't limited by brittle, general-purpose models or simplistic prompt engineering. We could build for clinical reasoning from first principles.

That gave us the ability, and the responsibility, to build something fundamentally better. We didn’t want AI that mimics clinical language. We wanted AI that mirrors clinical thinking, and that philosophy shaped every decision behind the system we built.

From summarizing to reasoning: A better brain for clinical AI

FactsR is our breakthrough in clinical reasoning infrastructure: a modular framework, for use cases including clinical documentation, that thinks step by step, just like a clinician. Rather than summarizing the full conversation in one go, FactsR breaks the task into three core stages:

- Extract – Pull out clinically relevant facts in real time as the conversation unfolds.

- Refine – Evaluate each fact using specialized reasoning agents to ensure accuracy, structure, and clinical relevance.

- Compose – Build the final note using only validated facts, structured according to standard formats like SOAP.
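To make the three stages concrete, here is a minimal, illustrative sketch in Python. It is not Corti's implementation or API: the `Fact` class, the `extract`, `refine`, and `compose` functions, and the keyword table are all hypothetical, and simple keyword matching stands in for the models and reasoning agents described above. The `rejected` set plays the role of the clinician reviewing facts during the visit.

```python
from dataclasses import dataclass

SOAP_ORDER = ["Subjective", "Objective", "Assessment", "Plan"]

# Toy keyword rules standing in for a real extraction model (assumption).
KEYWORD_TO_SECTION = {
    "cough": "Subjective",
    "temperature": "Objective",
    "likely": "Assessment",
    "prescribe": "Plan",
}


@dataclass(frozen=True)
class Fact:
    text: str
    section: str  # one of SOAP_ORDER


def extract(utterance: str) -> list[Fact]:
    """Stage 1: emit at most one candidate fact per utterance as it arrives."""
    lowered = utterance.lower()
    for keyword, section in KEYWORD_TO_SECTION.items():
        if keyword in lowered:
            return [Fact(utterance.strip(), section)]
    return []


def refine(facts: list[Fact], rejected: set[str]) -> list[Fact]:
    """Stage 2: drop duplicates and anything the clinician rejected."""
    seen: set[str] = set()
    kept: list[Fact] = []
    for fact in facts:
        key = fact.text.lower()
        if key in seen or fact.text in rejected:
            continue
        seen.add(key)
        kept.append(fact)
    return kept


def compose(facts: list[Fact]) -> str:
    """Stage 3: build a SOAP-ordered note from the refined facts only."""
    lines: list[str] = []
    for section in SOAP_ORDER:
        items = [fact.text for fact in facts if fact.section == section]
        if items:
            lines.append(f"{section}:")
            lines.extend(f"- {text}" for text in items)
    return "\n".join(lines)


transcript = [
    "I've had a dry cough for three days.",
    "Nice weather today.",
    "Temperature is 38.1 C.",
    "I've had a dry cough for three days.",
    "I'll prescribe an inhaler.",
]

# Facts are extracted utterance by utterance, not from the full transcript at once.
candidates = [fact for utterance in transcript for fact in extract(utterance)]
validated = refine(candidates, rejected={"I'll prescribe an inhaler."})
note = compose(validated)
print(note)
```

In this toy run, small talk never becomes a fact, the repeated cough statement is deduplicated, and the rejected plan item stays out of the note. The point of the design is that the note is composed only from facts that survived review, rather than from the raw transcript.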

This architecture does much more than improve output quality: it transforms the documentation process. Clinicians can see, verify, and shape the facts as they’re captured, making the experience interactive, explainable, and fully in their control.

The results speak for themselves. Head-to-head against a strong few-shot scribe on the public Primock57 benchmark, FactsR delivers:

- 49% reduction in missing clinically relevant content (completeness errors drop from 23.3% to 11.7%)

- 86% reduction in extraneous detail (conciseness errors fall from 14.3% to 2.0%)

- Groundedness remains high at 94%, comfortably above clinician reference notes
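The headline percentages are relative reductions in error rate. As a quick check, the short Python snippet below recomputes them from the before and after rates quoted in the list. The function name `relative_reduction` is ours, and the calculation assumes the quoted rates are already rounded, so small differences from the headline figures are expected.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`, relative to `before`."""
    return (before - after) / before * 100


# Error rates (in percent) as quoted in the list above.
completeness = relative_reduction(23.3, 11.7)
conciseness = relative_reduction(14.3, 2.0)

print(f"Completeness errors: {completeness:.1f}% fewer")
print(f"Conciseness errors: {conciseness:.1f}% fewer")
```

With the rounded inputs this gives roughly 49.8% and 86.0%, in line with the reported 49% and 86%.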

In plain language: more of what matters, far less of what doesn't.

And that control matters. As AI becomes more embedded in clinical workflows, trust and clinical outcomes become the true benchmarks of performance, not just BLEU scores or latency. OpenAI’s new HealthBench highlights this shift: the industry is moving toward evaluating AI agents based on their real-world utility, not just how fluent they sound.

FactsR reduces cognitive load, without sacrificing control or trust

(1) In enterprise healthcare AI procurement, “trust and outcomes matter most.”

Building the foundation for better clinical AI

We built FactsR to raise the bar for clinical documentation, but it represents something bigger: our approach to building clinical AI infrastructure that developers can actually trust and build upon. Whether you're building an ambient scribe, a digital front door, or patient-facing agents, FactsR demonstrates the kind of clinical-grade reasoning we're making accessible via our platform. It’s a foundation for safer, smarter workflows across healthcare.

So, if you’re building:

- An ambient scribe that needs real-time, clinician-in-the-loop reasoning to reduce hallucinations and improve accuracy

- Conversational agents that must extract structured, clinically salient content from noisy or unstructured dialogue

- Pioneering tools we haven’t imagined yet that need a clinically grounded thinking engine to bring them to life

Then FactsR can be your foundation. We’re looking for builders, partners, and pioneers ready to bring clinician-grade reasoning into every corner of healthcare.

► Start learning: docs.corti.ai.

► Start building: console.corti.app.
