What is an AI Agent? Core concepts explained

TL;DR

An agent is an LLM that reasons about how to solve problems using tools, rather than answering directly. It receives a task, decides what tools to call, interprets the results, and continues until the task is complete. Think of it as an LLM with a to-do list it writes for itself.

This piece covers:

- The core distinction between chatbots and agents

- How the LLM-tool loop works at a technical level

- A heuristic for when to use agents versus traditional code

- Simple and complex examples of agents in action

- Why agents are well-suited for healthcare workflows

The core distinction

A chatbot generates text. You ask it a question, and it produces an answer based on what it learned during training. Input in, output out.

An agent works differently. Give it a task, and it reasons about how to solve the problem. It breaks the task into steps, identifies what information it needs, selects the right tools, and executes a plan. The agent does not answer from memory. It figures out what to do, does it, and uses the results to decide what to do next.


One way to think about it: a chatbot retrieves or generates. An agent plans and executes.

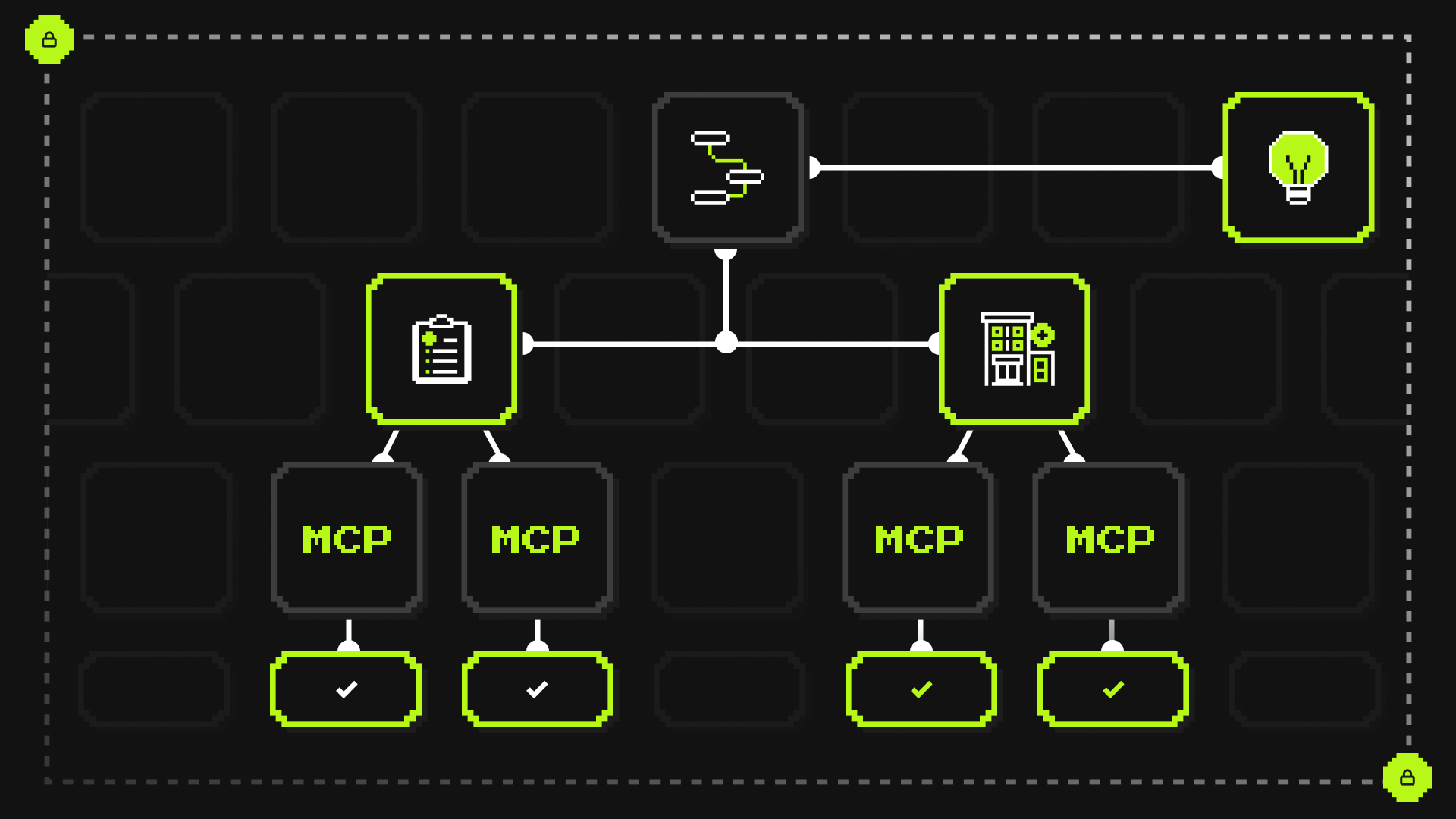

The anatomy of an agent

At a technical level, an agent combines an LLM with access to external tools. The LLM handles reasoning. The tools handle interaction with external systems: databases, APIs, EHRs, scheduling platforms, and other services the LLM cannot reach on its own.

This creates a loop:

- The agent receives a task and a list of available tools (functions it can call).

- Instead of generating a final answer, the LLM generates a tool call: a function name and arguments.

- The system executes the tool and returns the result to the LLM.

- The LLM evaluates the result and decides whether to call another tool or return a final response.

- The loop continues until the task is complete.

The LLM never performs the task itself. It reasons about what needs to happen, delegates execution to tools, and synthesizes the results.
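The loop above can be sketched in a few lines of Python. This is a minimal, framework-free sketch. ToolCall, FinalAnswer, run_agent, and scripted_llm are hypothetical names. scripted_llm is a hard-coded stand-in for a real model so the example runs offline. A real implementation would send the history and tool descriptions to an LLM API and parse the reply into one of the two decision types.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Union


@dataclass
class ToolCall:
    """Emitted by the LLM instead of an answer: a function name and arguments."""
    name: str
    arguments: dict[str, Any]


@dataclass
class FinalAnswer:
    """Emitted by the LLM once it decides the task is complete."""
    text: str


# Any callable mapping (conversation so far, available tool names) to a decision.
LLM = Callable[[list[dict[str, Any]], list[str]], Union[ToolCall, FinalAnswer]]


def run_agent(
    task: str,
    tools: dict[str, Callable[..., Any]],
    llm: LLM,
    max_steps: int = 10,
) -> str:
    """Ask the LLM for a decision, execute any tool call, feed the result back."""
    history: list[dict[str, Any]] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = llm(history, list(tools))
        if isinstance(decision, FinalAnswer):
            return decision.text
        # The system, not the LLM, runs the tool and records the result.
        result = tools[decision.name](**decision.arguments)
        history.append(
            {"role": "tool", "name": decision.name, "content": json.dumps(result)}
        )
    raise RuntimeError(f"No final answer after {max_steps} steps")


def scripted_llm(
    history: list[dict[str, Any]], tool_names: list[str]
) -> Union[ToolCall, FinalAnswer]:
    """Toy stand-in for a model: call one tool, then answer from its result."""
    last = history[-1]
    if last["role"] == "user":
        return ToolCall(name="multiply", arguments={"a": 6, "b": 7})
    return FinalAnswer(text=f"The answer is {last['content']}.")


print(run_agent("What is 6 times 7?", {"multiply": lambda a, b: a * b}, scripted_llm))
```

The max_steps cap is a design choice worth keeping in some form. Without it, a model that never settles on a final answer would loop indefinitely.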

When to use an agent

A useful heuristic: if you can draw the decision tree in advance, write code. If the input and output are variable and the path depends on what you learn along the way, use an agent.

Agents excel when inputs vary in structure, when the right set of steps depends on intermediate results, and when outputs require synthesis rather than simple lookup. They add unnecessary complexity when inputs are fixed, decision trees are deterministic, and outputs must conform to strict schemas.

Where an agent is the right fit, two properties make it suitable for production environments where accuracy matters: its reasoning is grounded in data retrieved through tools, and its actions are limited to the tools it is given.

A simple example

Consider the question: "What allergies does this patient have?"

A chatbot would attempt to answer from its training data, which contains no information about this specific patient. It might hedge, refuse, or, worse, hallucinate a plausible-sounding answer.

An agent handles this differently:

- The LLM receives the query along with a list of available tools (for example, search_ehr, search_transcript).

- The LLM generates a tool call: search_ehr(patient_id=123, query="allergies").

- The system executes the query and returns: ["Penicillin", "Sulfa"].

- The LLM generates a final response: "The patient is allergic to Penicillin and Sulfa."

The agent did not guess. It looked up the answer from an authoritative source.
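The pieces behind this exchange can be sketched as follows, using a toy in-memory dictionary in place of a real EHR. The tool names and the example call come from the text above. FAKE_EHR, the tool descriptions, execute, and phrase_allergies are hypothetical. The schema layout only approximates the JSON-Schema style many LLM APIs use for tool parameters, and exact field names vary by provider. Here a template produces the final sentence. A real agent would have the LLM write it.

```python
from typing import Any

# Hypothetical in-memory stand-in for an EHR; a real tool would query the EHR's API.
FAKE_EHR: dict[int, dict[str, list[str]]] = {
    123: {"allergies": ["Penicillin", "Sulfa"]},
}


def search_ehr(patient_id: int, query: str) -> list[str]:
    """Return the recorded entries in one section of a patient's chart."""
    return FAKE_EHR.get(patient_id, {}).get(query, [])


def search_transcript(patient_id: int, query: str) -> list[str]:
    """Hypothetical second tool, offered to the LLM but not needed here."""
    return []


def spec(name: str, description: str) -> dict[str, Any]:
    """Describe a tool in a JSON-Schema style (field names are illustrative)."""
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {
                "patient_id": {"type": "integer"},
                "query": {"type": "string"},
            },
            "required": ["patient_id", "query"],
        },
    }


# What the LLM is shown alongside the user's question.
TOOL_SPECS = [
    spec("search_ehr", "Search a patient's electronic health record."),
    spec("search_transcript", "Search the visit transcript for a patient."),
]
TOOLS = {"search_ehr": search_ehr, "search_transcript": search_transcript}


def execute(tool_call: dict[str, Any]) -> Any:
    """Dispatch a tool call emitted by the LLM to the matching function."""
    return TOOLS[tool_call["name"]](**tool_call["arguments"])


def phrase_allergies(allergies: list[str]) -> str:
    """Template standing in for the LLM's final wording."""
    if not allergies:
        return "No allergies are recorded for this patient."
    if len(allergies) == 1:
        return f"The patient is allergic to {allergies[0]}."
    return f"The patient is allergic to {', '.join(allergies[:-1])} and {allergies[-1]}."


# The tool call from the example, as the LLM might emit it.
call = {"name": "search_ehr", "arguments": {"patient_id": 123, "query": "allergies"}}
result = execute(call)
print(result)
print(phrase_allergies(result))
```

An empty result is phrased as "no allergies recorded" rather than "no allergies." Missing data in a chart is not the same as a confirmed absence.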

A more complex example

Agents become more valuable when tasks require multiple steps and judgment calls along the way. Consider the question: "Is this patient ready for discharge?"

A static workflow would require mapping every possible branch in advance. An agent reasons through it dynamically:

- Check vital signs. Are they stable?

- Check pending labs. Are any results outstanding?

- Check medication reconciliation. Is it complete?

- Check follow-up appointments. Are they scheduled?

- Synthesize findings into a recommendation.

The specific sequence depends on what the agent learns at each step. If vital signs are unstable, the answer is immediate. If everything looks good but follow-up appointments are missing, the agent flags that specific gap. The path through the task emerges from the data, not from a predetermined decision tree.
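The structure of this example can be sketched as three parts: a set of check tools, a step that picks the next check based on what has been found so far, and a final synthesis. This is an illustration, not a clinical rule set. The check functions, patient fields, and gap wording are all hypothetical. choose_next uses two fixed rules so the code runs without a model. In a real agent the LLM makes that choice. The fixed rules here only mimic the behavior described above: stop early on unstable vital signs, otherwise keep checking and name each gap.

```python
from typing import Callable, Optional


def check_vitals(p: dict) -> tuple[bool, str]:
    return p["vitals_stable"], "vital signs are unstable"


def check_labs(p: dict) -> tuple[bool, str]:
    return not p["pending_labs"], "labs are outstanding: " + ", ".join(p["pending_labs"])


def check_med_rec(p: dict) -> tuple[bool, str]:
    return p["med_rec_complete"], "medication reconciliation is incomplete"


def check_follow_up(p: dict) -> tuple[bool, str]:
    return p["follow_up_scheduled"], "follow-up appointments are not scheduled"


# Hypothetical tools; each returns (passed, description of the gap if it failed).
CHECKS: dict[str, Callable[[dict], tuple[bool, str]]] = {
    "vitals": check_vitals,
    "labs": check_labs,
    "med_rec": check_med_rec,
    "follow_up": check_follow_up,
}


def choose_next(gaps: dict[str, str], remaining: list[str]) -> Optional[str]:
    """Stand-in for the LLM's reasoning: pick the next check, or None to stop."""
    if "vitals" in gaps:
        return None  # unstable vitals settle the question; stop looking
    return remaining[0] if remaining else None


def assess_discharge(patient: dict) -> str:
    gaps: dict[str, str] = {}
    remaining = list(CHECKS)
    while (step := choose_next(gaps, remaining)) is not None:
        remaining.remove(step)
        passed, gap = CHECKS[step](patient)
        if not passed:
            gaps[step] = gap
    # Synthesize the findings into a recommendation that names each gap.
    if not gaps:
        return "Ready for discharge: all checks passed."
    return "Not ready for discharge: " + "; ".join(gaps.values()) + "."


# Hypothetical patient snapshot standing in for EHR, lab, and scheduling data.
patient = {
    "vitals_stable": True,
    "pending_labs": [],
    "med_rec_complete": True,
    "follow_up_scheduled": False,
}
print(assess_discharge(patient))
```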

Agents in healthcare

Healthcare tasks often require pulling from multiple sources: EHRs, lab systems, imaging, clinical guidelines, payer requirements. The right sequence of steps depends on the specific query and what data exists. Hard-coding every possible path is brittle and expensive to maintain.

More importantly, an LLM generating responses from its own training knowledge alone creates risk in clinical settings. What if the information is outdated? What if the model hallucinates plausible but incorrect details?

Agents address this by design. Instead of inferring a patient's medication history, a well-designed agent queries the EHR. Instead of guessing at coding requirements, it looks them up. The reasoning is grounded in data retrieved at runtime from authoritative sources.

Beyond retrieval, clinical workflows often require action: drafting documentation, preparing orders, triggering downstream processes. Agents provide a controlled execution layer where these actions happen within defined boundaries, with the ability to pause for human review when needed.
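One way to build the pause for human review is to mark action tools as requiring approval and have the executor ask a reviewer before running them. This is a minimal sketch. draft_note and prepare_order are hypothetical tools, not part of any real system. The host application supplies the approve callback. console_approve shows one possible callback built on Python's built-in input().

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    fn: Callable[..., Any]
    requires_approval: bool = False  # True for actions that need human sign-off


def execute_with_review(
    tools: dict[str, Tool],
    name: str,
    arguments: dict[str, Any],
    approve: Callable[[str, dict[str, Any]], bool],
) -> dict[str, Any]:
    """Run a tool call inside defined boundaries, pausing for review where required."""
    if name not in tools:
        # Boundary: the agent can only invoke tools it was explicitly given.
        raise PermissionError(f"Tool {name!r} is not available to this agent")
    tool = tools[name]
    if tool.requires_approval and not approve(name, arguments):
        return {"status": "rejected", "tool": name}
    return {"status": "done", "result": tool.fn(**arguments)}


def draft_note(patient_id: int, text: str) -> str:
    return f"DRAFT note for patient {patient_id}: {text}"


def prepare_order(patient_id: int, order: str) -> str:
    return f"Order for patient {patient_id} queued: {order}"


TOOLS = {
    "draft_note": Tool(draft_note),
    "prepare_order": Tool(prepare_order, requires_approval=True),
}


def console_approve(name: str, arguments: dict[str, Any]) -> bool:
    """Pause and ask a human reviewer at the terminal."""
    answer = input(f"Approve {name} with {arguments}? [y/N] ")
    return answer.strip().lower() == "y"
```

This sketch places the approval check in the executor rather than in the prompt, so the gate does not depend on the model following instructions. A rejected call returns a status the LLM can reason about, but the action itself never runs.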